How Graphics Evolved from 8-bit to Modern Realism

18 October 2025

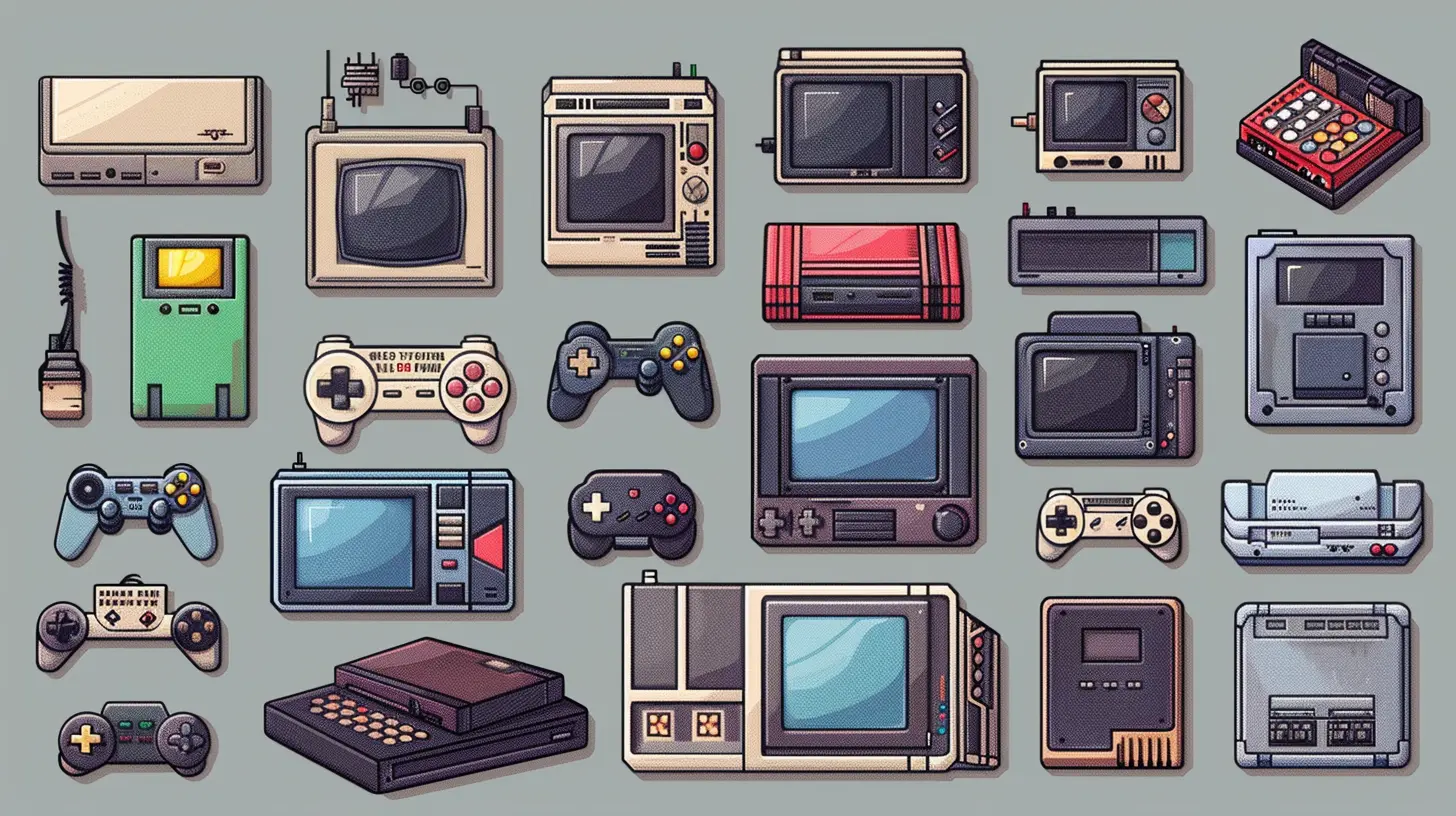

If you’re a gamer, you’ve probably caught yourself staring at the screen and wondering, "How in the actual pixel did we get here?" I mean, how did we go from blocky plumbers jumping on turtles to lifelike dragons breathing fire with photorealistic fury?

Buckle up, fellow pixel-pusher. We're going to take a wild ride through time and tech, from the humble beginnings of chunky 8-bit graphics to the hyper-detailed visuals that blur the line between game and reality.

The 8-bit Era: Birth of the Pixel Playground

Ah, the good ol' days, the sweet, sweet days of 8-bit graphics. We’re talking Super Mario Bros., The Legend of Zelda, and Metroid. These games looked like mosaic art made with digital Lego blocks, yet they sparked imaginations around the globe.

Back then, hardware was super limited. The NES (Nintendo Entertainment System) could only handle 256x240 resolution, and only a handful of colors could be shown on-screen at once. Characters looked more like abstract paintings than people, but that pixelated charm? Legendary.

Why 8-bit?

It all comes down to computing power. "8-bit" refers to the size of the data units the system could process. It could handle 256 values (2^8), which meant less memory and fewer colors. So, developers had to get creative—every pixel, every color had to count.
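If you want to see that 2^8 math in action, here's a tiny Python sketch. The function names are made up for illustration, and nothing here is actual NES code; it just shows how the number of available values grows with bit width, and how an 8-bit value wraps around once it runs out of room.

```python
def distinct_values(bits: int) -> int:
    """Number of distinct values an unsigned integer of this width can hold."""
    return 2 ** bits


def wrap_8bit(value: int) -> int:
    """Keep only the low 8 bits, mimicking how an 8-bit register wraps around."""
    return value & 0xFF


for bits in (8, 16, 32):
    count = distinct_values(bits)
    print(f"{bits}-bit: {count:,} values (0 to {count - 1:,})")

# 255 is the largest 8-bit value, so adding 1 rolls over to 0.
print(wrap_8bit(255 + 1))
```

That rollover is why so many old games have counters that top out at 255.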

16-bit to 32-bit: A Splash of Color and Detail

Fast forward a few years, and boom: enter the 16-bit era. Say hello to the SNES and Sega Genesis. Suddenly, sprites had more depth, animations were smoother, and soundtracks became less beep-boop and more boom-bap. That's the magic of more bits.

Games like “Chrono Trigger,” “Sonic the Hedgehog,” and “Street Fighter II” exploded with personality. The SNES could pick from a palette of 32,768 colors (with up to 256 on screen at once), compared to the 50-odd colors in the NES palette. Yeah, that’s a huge leap.
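Where does 32,768 come from? It's what you get from 15 bits of color: 5 bits each for red, green, and blue. Here's a minimal sketch, assuming a layout with red in the lowest bits and blue in the highest. The helper names are hypothetical and only there to show the arithmetic.

```python
def pack_rgb555(r: int, g: int, b: int) -> int:
    """Pack three 5-bit channels (0-31 each) into one 15-bit color value."""
    for channel in (r, g, b):
        if not 0 <= channel <= 31:
            raise ValueError("each channel must fit in 5 bits (0-31)")
    # Assumed layout: blue in bits 10-14, green in bits 5-9, red in bits 0-4.
    return (b << 10) | (g << 5) | r


def unpack_rgb555(color: int) -> tuple[int, int, int]:
    """Split a 15-bit color back into its (r, g, b) channels."""
    return color & 0x1F, (color >> 5) & 0x1F, (color >> 10) & 0x1F


total_colors = 2 ** 15  # 32 reds * 32 greens * 32 blues
print(total_colors)

white = pack_rgb555(31, 31, 31)  # every bit set, the largest 15-bit value
print(white, unpack_rgb555(white))
```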

Then came the 32-bit revolution. Cue the PlayStation and Sega Saturn. 2D was still around, but now developers flirted with a curious new beast: 3D.

Remember Tomb Raider?

Lara Croft’s pointy-polygonal figure was a glimpse into the future. It was crude, sure—but it was 3D. That was enough to blow minds back then.

The Jump to 3D: Welcome to the Third Dimension

This was where the real magic (and confusion) began. 3D gaming was like trying to walk when you’ve only ever crawled. Game devs were figuring things out on the fly. Control schemes? Wonky. Camera angles? Chaotic. But the ambition? Through the roof.

Why the shift to 3D?

Well, hardware got stronger. Dedicated 3D graphics chips, the forerunners of today's GPUs (Graphics Processing Units), started to become a thing. Consoles like the original PlayStation and the Nintendo 64 gave developers tools to render full 3D environments.

Games like “The Legend of Zelda: Ocarina of Time” and “Metal Gear Solid” didn’t just tell stories—they let you live them. Sure, textures were muddy and models were clunky, but the immersion was undeniable.

Early 2000s: The Rise of Real-Time Rendering

The dawn of the 21st century brought serious horsepower to the gaming table. Nvidia and ATI (now AMD) started launching dedicated graphics cards with more VRAM (Video RAM), better shaders, and higher rendering capabilities.

Consoles? They didn't lag behind. The Xbox and PlayStation 2 began rendering complex 3D environments with better lighting, fog, and even ragdoll physics. Games like “Halo: Combat Evolved,” “GTA: Vice City,” and “Shadow of the Colossus” set a new standard.

Textures got sharper, character models became more anatomically correct (goodbye triangle boobs), and facial animations started emerging. Developers also started experimenting with dynamic lighting and bump mapping—tricks that added depth without crushing performance.
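To get a feel for why bump mapping is such a cheap trick, here's a toy Python sketch. It assumes a simple height map and basic Lambert (cosine) diffuse lighting; the function names and the tiny 3x3 map are invented for illustration, not taken from any real engine. The surface stays perfectly flat. Only the normal used for lighting gets tilted, and that alone makes one side of the "bump" bright and the other dark.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def bumped_normal(height, x, y, strength=1.0):
    """Tilt the flat normal (0, 0, 1) using the height map's local slope."""
    rows, cols = len(height), len(height[0])
    # Finite differences between neighbors, clamped at the map edges.
    dx = height[y][min(x + 1, cols - 1)] - height[y][max(x - 1, 0)]
    dy = height[min(y + 1, rows - 1)][x] - height[max(y - 1, 0)][x]
    return normalize((-strength * dx, -strength * dy, 1.0))


def diffuse(normal, to_light):
    """Lambert shading: brightness is the cosine of the angle to the light."""
    light = normalize(to_light)
    return max(0.0, sum(n * l for n, l in zip(normal, light)))


height_map = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],  # a single raised texel in the middle
    [0.0, 0.0, 0.0],
]
to_light = (1.0, 0.0, 1.0)  # direction from the surface toward the light (+x side)

flat = diffuse((0.0, 0.0, 1.0), to_light)
left_of_bump = diffuse(bumped_normal(height_map, 0, 1), to_light)
right_of_bump = diffuse(bumped_normal(height_map, 2, 1), to_light)

# The slope facing the light is brighter than flat ground; the far slope is darker.
print(round(left_of_bump, 3), round(flat, 3), round(right_of_bump, 3))
```

No extra polygons were added here, which is the whole point: the detail lives in a texture, not in the geometry.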

HD Revolution: When Games Got a Makeover

Around 2005-2006, everything changed, because high definition (HD) swept through gaming like a graphical tsunami.

The Xbox 360 and PS3 were ready to push detailed textures at resolutions of 720p and 1080p. Suddenly, you could see pores on faces, wrinkles in leather jackets, and reflections dancing on puddles. It was like watching a movie, only you were in control.

Take a game like “Uncharted.” Nathan Drake’s animation, the environments, the lighting: it all felt cinematic. This era also pushed motion capture into the mainstream, with actors’ facial and body movements recorded to make characters move more realistically.

3D didn’t just look better. It felt better.

The Modern Age: Photorealism, Ray Tracing & Beyond

Cue the PlayStation 5, Xbox Series X, and insane gaming PCs that could probably launch a rocket.

We’re now living in the age of photorealistic detail. I mean, seriously, did you see “Cyberpunk 2077” or “Red Dead Redemption 2” on ultra settings? We’re talking pores, reflections, cloth physics, dust particles in sunlight, and hair that reacts to wind direction.

How did we get here?

Two words: ray tracing. This tech mimics how light works in real life. Shadows? Perfectly cast. Reflections? Mirror-like. Ambient lighting? Jaw-droppingly gorgeous.
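At its core, ray tracing boils down to one question asked millions of times: "does this ray hit anything?" Here's a bare-bones Python sketch of that idea, assuming a scene made only of spheres and a single point light. The function names and scene values are made up for illustration, and this is nowhere near how a shipping engine or GPU does it. It only shows the basic test, plus how a shadow falls out of it: fire a ray from a point toward the light and see whether something is in the way.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of it, or None.

    `direction` is assumed to be a unit vector.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c  # discriminant of the ray/sphere quadratic
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-6:  # ignore hits behind (or exactly at) the origin
            return t
    return None


def in_shadow(point, light_pos, spheres):
    """Cast a shadow ray from `point` to the light; True if a sphere blocks it."""
    to_light = tuple(l - p for l, p in zip(light_pos, point))
    distance = math.sqrt(sum(c * c for c in to_light))
    direction = tuple(c / distance for c in to_light)
    for center, radius in spheres:
        t = hit_sphere(point, direction, center, radius)
        if t is not None and t < distance:
            return True
    return False


spheres = [((0.0, 0.0, -5.0), 1.0)]  # one sphere: (center, radius)
light = (0.0, 5.0, -5.0)             # point light directly above the sphere

print(hit_sphere((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), *spheres[0]))  # camera ray hits
print(in_shadow((0.0, -3.0, -5.0), light, spheres))  # directly under the sphere
print(in_shadow((4.0, -3.0, -5.0), light, spheres))  # off to the side
```

Reflections work the same way: when a ray hits something shiny, you bounce a new ray off the surface and ask the question again.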

Add to that neural networks, AI upscaling (like Nvidia's DLSS), and advanced procedural generation, and you’ve got games that look dangerously close to real life.

The Role of Game Engines: The Real MVPs

Behind every beautiful game is a powerful game engine. These are the tools that developers use to build, render, and animate everything you see.

- Unreal Engine 5: This beast brings features like Nanite (for insane detail without loss of performance) and Lumen (realistic lighting).

- Unity: Widely used for indie games and mobile games with impressive visuals.

- Frostbite, RE Engine, Decima—they all bring unique advantages to the table.

These engines are now so advanced that even movie studios are using them for cinematic effects. Yeah—games are influencing Hollywood now.

The Future: What’s Next?

So where do we go from here? If we’re already kissing the edges of reality, what could possibly be next?

Well, strap in:

- Photogrammetry: Turning real-world photos into in-game assets. Want an actual tree from Yosemite in your forest scene? Done.

- Volumetric rendering: For hyper-realistic fog, smoke, and clouds.

- AI-generated environments: Imagine a game world that builds itself as you play.

- Holographic and AR gaming: Pokémon GO was just the start.

And let’s not forget Neural Rendering—where AI fills in missing graphical details to enhance realism in real-time. The possibilities are endless.

Why Graphics Matter (More Than You Think)

Now, I’ll be the first to say gameplay > graphics. A pixel-perfect game with lousy mechanics is still a lousy game. But good visuals? They enhance immersion, tell stories, and make us feel things.

Graphics aren’t just eye candy; they're emotional anchors. The shimmer of light on water in “The Witcher 3,” the snow crunch underfoot in “God of War,” the neon haze in “Cyberpunk 2077”: these visuals pull you into the world.

Remember the first time you stepped into a fully 3D world? It felt like stepping into the future.

Looking Back with Love

Sure, today’s games are jaw-droppingly gorgeous, but there’s something nostalgically beautiful about the blocky, janky, low-res worlds of the past. Those 8-bit and 16-bit titles didn’t have fancy lighting or lifelike animations, but they had charm, personality, and soul.

It’s like flipping through an old photo album. You remember every corner, every jump, every pixel. It’s proof that greatness isn’t always about how real something looks. It’s about how deeply it pulls you in.

Final Thoughts: From Pixels to Reality

From the earliest square-headed heroes to the ultra-detailed warriors of today, the journey of video game graphics is nothing short of astonishing.

We’ve gone from pixels to photorealism, and yet, the heart of gaming remains unchanged: it’s about storytelling, immersion, and fun. Graphics are just the brushstrokes that make the canvas come alive.

So next time you boot up your console or rig, take a second to marvel—not just at how far we’ve come, but how much further we still have to go.

The evolution of gaming graphics isn’t over. Not even close.

All images in this post were generated using AI tools.

Category: Gaming History

Author: Brianna Reyes

Discussion

1 comment

Oriana McKeehan

Great article! It’s fascinating to see how far graphics have come from the charming simplicity of 8-bit designs to today’s stunning realism. The evolution reflects both technological advancements and creative artistry, making gaming more immersive than ever. Thank you for this insightful overview!

October 20, 2025 at 2:26 PM

Brianna Reyes

Thank you for your thoughtful comment! I'm glad you enjoyed the article and appreciate the journey of graphics evolution!